Mix2Morph: Learning Sound Morphing from Noisy Mixes

Annie Chu¹,², Hugo Flores-García², Oriol Nieto¹, Justin Salamon¹, Bryan Pardo², Prem Seetharaman¹

¹Adobe Research; ²Northwestern University

Abstract

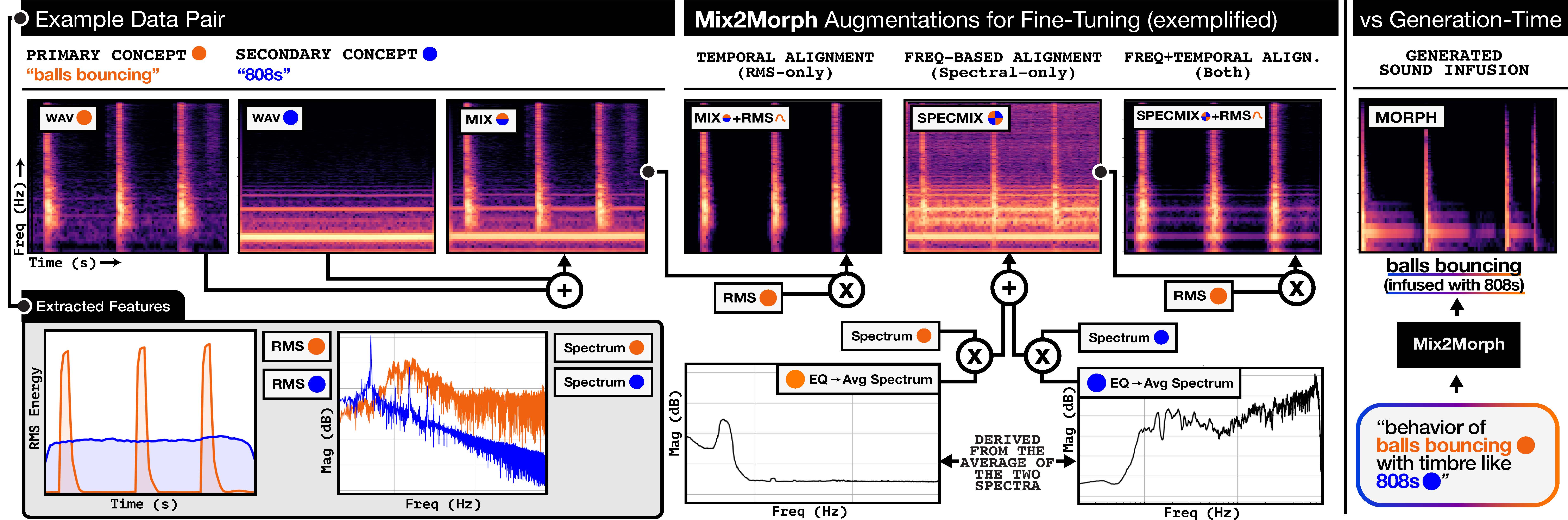

We introduce Mix2Morph, a text-to-audio diffusion model fine-tuned to perform sound morphing without a dedicated dataset of morphs. By fine-tuning on noisy surrogate mixes at higher diffusion timesteps, Mix2Morph yields stable, perceptually coherent morphs that convincingly integrate qualities of both sources. We specifically target sound infusions, a practically and perceptually motivated subclass of morphing in which one sound acts as the dominant primary source, providing the overall temporal and structural behavior, while a secondary sound is infused throughout, enriching its timbral and textural qualities. Objective evaluations and listening tests show that Mix2Morph outperforms prior baselines and produces high-quality sound infusions across diverse categories, representing a step toward more controllable and concept-driven tools for sound design.
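One plausible reading of "fine-tuning on noisy surrogate mixes at higher diffusion timesteps" is sketched below: a simple mix of the primary and secondary sources stands in for a true morph as the training target, and the denoising loss is applied only at high-noise timesteps, so the mix's artifacts are never used as low-noise targets. This is a minimal sketch under stated assumptions, not the authors' code: the linear beta schedule, the `secondary_gain` mixing rule, the `min_frac = 0.6` cutoff, and every function name are hypothetical, and plain Python lists stand in for audio latents.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

# Hypothetical sketch: the schedule, mix rule, and min_frac cutoff below are
# illustrative assumptions, not Mix2Morph's published settings.


def linear_beta_schedule(num_steps: int = 1000, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> List[float]:
    """Linear DDPM variance schedule (assumed; the page does not specify one)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: Sequence[float]) -> List[float]:
    """alpha_bar[t] = prod_{s <= t} (1 - beta_s); strictly decreasing in t."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def sample_high_timestep(num_steps: int, min_frac: float, rng: random.Random) -> int:
    """Draw t uniformly from [min_frac * T, T - 1], i.e. only high-noise steps."""
    return rng.randint(int(min_frac * num_steps), num_steps - 1)


def noisy_surrogate_mix(x_primary: Sequence[float], x_secondary: Sequence[float],
                        t: int, alpha_bar: Sequence[float], rng: random.Random,
                        secondary_gain: float = 0.5) -> Tuple[List[float], List[float]]:
    """Sum the sources into a surrogate 'morph', then noise it with q(x_t | x_0)."""
    x0 = [p + secondary_gain * s for p, s in zip(x_primary, x_secondary)]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    signal, noise = math.sqrt(alpha_bar[t]), math.sqrt(1.0 - alpha_bar[t])
    xt = [signal * x + noise * e for x, e in zip(x0, eps)]
    return xt, eps


# A denoiser maps (noisy latent, timestep, text prompt) -> predicted noise.
Denoiser = Callable[[List[float], int, str], List[float]]


def training_step(denoiser: Denoiser, x_primary: Sequence[float],
                  x_secondary: Sequence[float], prompt: str,
                  alpha_bar: Sequence[float], rng: random.Random,
                  min_frac: float = 0.6) -> float:
    """One epsilon-prediction MSE step, restricted to high timesteps."""
    t = sample_high_timestep(len(alpha_bar), min_frac, rng)
    xt, eps = noisy_surrogate_mix(x_primary, x_secondary, t, alpha_bar, rng)
    pred = denoiser(xt, t, prompt)
    return sum((p - e) ** 2 for p, e in zip(pred, eps)) / len(eps)


if __name__ == "__main__":
    rng = random.Random(0)
    abar = cumulative_alphas(linear_beta_schedule())
    zero_model = lambda xt, t, prompt: [0.0] * len(xt)  # placeholder denoiser
    print(training_step(zero_model, [1.0] * 8, [0.5] * 8,
                        "behavior of dog barking with timbre like car horn",
                        abar, rng))
```

Restricting the loss to high timesteps is the design choice being illustrated: at high noise levels only coarse structure is recoverable, so an imperfect surrogate mix is a more forgiving target there than at low noise levels.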

Fig. 1: Mix2Morph audio examples (headphones recommended)

| wav1: balls bouncing | wav2: 808s (bass) | None (Simple Mix) | +RMS-only | +Spectral-only | +Both | Generated Infusion |
|---|---|---|---|---|---|---|

Listening Examples (headphones recommended)

Prompt template: "behavior of [primary source] with timbre like [secondary source]"
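The template is asymmetric: the first slot names the source that supplies temporal and structural behavior, and the second names the source whose timbre is infused throughout, so swapping them describes a different infusion. A minimal sketch of filling it programmatically follows; the helper `infusion_prompt` is hypothetical and not part of any released Mix2Morph code.

```python
PROMPT_TEMPLATE = "behavior of {primary} with timbre like {secondary}"


def infusion_prompt(primary: str, secondary: str) -> str:
    """Fill the infusion prompt template; argument order encodes the roles.

    primary   -> supplies the overall temporal/structural behavior
    secondary -> supplies the timbre/texture infused throughout
    """
    primary, secondary = primary.strip(), secondary.strip()
    if not primary or not secondary:
        raise ValueError("both primary and secondary source descriptions are required")
    return PROMPT_TEMPLATE.format(primary=primary, secondary=secondary)


print(infusion_prompt("dog barking", "car horn"))
# behavior of dog barking with timbre like car horn
```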

Samples are drawn from the listening study; a copy of the study as administered is available here.

Mix2Morph: Most Convincing

Listener Likert ratings are shown below each audio clip, where applicable.

| Infusion Prompt | Mix2Morph (ours) | LGrS | MorphFader | Simple Mixing | SoundMorpher | Base Model |
|---|---|---|---|---|---|---|
| behavior of monster growling with timbre like motorcycle revving | 4.6/5 | 2.1/5 | 2.0/5 | 1.9/5 | – | – |
| behavior of water dripping with timbre like cowbell clang | 4.3/5 | 2.5/5 | 1.1/5 | 3.0/5 | – | – |
| behavior of frog croaking with timbre like ratchet crank | 4.2/5 | 2.2/5 | 1.9/5 | 3.5/5 | – | – |
| behavior of tennis ball being served with timbre like xylophone hit | 4.2/5 | 1.7/5 | 1.4/5 | 3.2/5 | – | – |
| behavior of dog barking with timbre like car horn | 4.12/5 | 1.3/5 | 1.5/5 | 2.8/5 | – | – |
| behavior of electric buzz with timbre like bee swarm | 4.0/5 | 2.1/5 | 3.0/5 | 3.7/5 | – | – |
| behavior of door creaking with timbre like zombie groan | 4.0/5 | 1.4/5 | 2.3/5 | 3.5/5 | – | – |
| behavior of phone ringing with timbre like pigeon cooing | 3.9/5 | 1.8/5 | 1.6/5 | 2.7/5 | – | – |

Mix2Morph: Least Convincing

Listener Likert ratings are shown below each audio clip, where applicable.

| Infusion Prompt | Mix2Morph (ours) | LGrS | MorphFader | Simple Mixing | SoundMorpher | Base Model |
|---|---|---|---|---|---|---|
| behavior of car tire screech with timbre like hawk screech | 2.2/5 ⚠️ Failure case: collapses to a single concept | 3.0/5 | 1.4/5 | 3.5/5 | – | – |
| behavior of cow mooing with timbre like horse neighing | 2.5/5 ⚠️ Failure case: sounds like a dynamic morph rather than a static infusion | 2.0/5 | 2.2/5 | 2.9/5 | – | – |
| behavior of boot steps with timbre like metallic clank on metal | 2.6/5 | 1.9/5 | 1.1/5 | 2.8/5 | – | – |
| behavior of swords clashing with timbre like snare drum hits | 2.9/5 | 1.7/5 | 1.3/5 | 3.6/5 | – | – |
| behavior of hammering into a door with timbre like a marimba | 2.9/5 ⚠️ Failure case: sounds like drums, not marimba (moves in the right direction, but not far enough to be perceived as marimba) | 2.4/5 | 1.8/5 | 3.0/5 | – | – |

Note: If you have trouble viewing the samples, please click here for a backup link.

Ethics & Positionality Statement

About: This project is situated within a framework of care toward creative practitioners, with the aim of supporting sound designers in exploring new modes of sonic creativity. Our goal is to develop tools that facilitate sound transformation, shaping, and adaptation, tasks that remain challenging to accomplish with existing methods. One such challenge is convincingly blending sound concepts together, which led us to focus on sound infusions. We acknowledge that the current Mix2Morph system primarily generates static sound infusions, which limits its immediate applicability in interactive design contexts; it is therefore not yet suitable for direct creative deployment. We also recognize that the present reliance on text as the input modality may not align with the established practices of sound designers, whose workflows are typically grounded in auditory feedback and embodied listening (Kamath et al., 2024). Accordingly, while this work focuses on establishing foundational functionality, we encourage future research to prioritize controllability and real-time interactivity to enhance usability and creative agency, and to leverage participatory and co-design approaches that better align tool affordances with the needs and intuitions of creative users.

Training Data: All data used for training and evaluation are licensed and ethically sourced, drawing exclusively from publicly available CC-licensed datasets and selected licensed proprietary SFX datasets.